A big shout-out to Faiz Muhammad for completing his master’s thesis titled “Prediction of Camera Calibration and Distortion Parameters Using Deep Learning and Synthetic Data” at AILiveSim. Special thanks to Dr. Fahad Sohrab from Tampere University, who supervised the thesis on the university’s behalf, and to Dr. Farhad Pakdaman, also from Tampere University, for his continuous support throughout the project. A warm thanks as well to all our colleagues at AILiveSim for their support, especially to Pierre Corbani, who helped develop the software.

In his master’s thesis, Faiz explored the calibration of linear cameras using the Brown-Conrady lens distortion model. Camera calibration is a crucial task in many computer vision and robotics applications. What sets Faiz’s work apart is the use of deep learning for single-image camera calibration, without the need for special calibration objects. The approach estimates both the camera intrinsic parameters, such as the focal length and the principal point, and the lens distortion parameters, including the radial and tangential distortion coefficients. The results were validated on both synthetic and real-world data, demonstrating the robustness and accuracy of the method.
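To make this parameter set concrete, here is a minimal Python sketch of the forward camera model. A 3D point in camera coordinates is projected with the pinhole model, distorted with the Brown-Conrady radial terms (k1, k2, k3) and tangential terms (p1, p2), and finally mapped to pixels with the intrinsics fx, fy, cx, cy. The coefficient names and ordering follow the common OpenCV convention, which is an assumption here; the thesis may parameterize the model differently, and `CameraParams` and `project` are hypothetical names, not AILiveSim code.

```python
from dataclasses import dataclass

@dataclass
class CameraParams:
    # Intrinsic parameters, in pixels
    fx: float
    fy: float
    cx: float
    cy: float
    # Brown-Conrady distortion coefficients (OpenCV-style ordering assumed)
    k1: float = 0.0
    k2: float = 0.0
    k3: float = 0.0
    p1: float = 0.0
    p2: float = 0.0

def project(point_cam, cam):
    """Project a 3D point given in camera coordinates to pixel coordinates (u, v)."""
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    # Perspective division: ideal (undistorted) normalized image coordinates
    x, y = X / Z, Y / Z
    r2 = x * x + y * y
    # Radial factor 1 + k1*r^2 + k2*r^4 + k3*r^6
    radial = 1 + cam.k1 * r2 + cam.k2 * r2**2 + cam.k3 * r2**3
    # Radial scaling plus tangential (decentering) terms
    x_d = x * radial + 2 * cam.p1 * x * y + cam.p2 * (r2 + 2 * x * x)
    y_d = y * radial + cam.p1 * (r2 + 2 * y * y) + 2 * cam.p2 * x * y
    # Intrinsics map distorted normalized coordinates to pixels
    return cam.fx * x_d + cam.cx, cam.fy * y_d + cam.cy

# Usage with made-up parameter values
cam = CameraParams(fx=800.0, fy=800.0, cx=640.0, cy=360.0, k1=-0.1)
print(project((1.0, 0.5, 2.0), cam))
```

A single-image method like the one described would, in effect, have to recover all nine numbers in `CameraParams` from image content alone.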

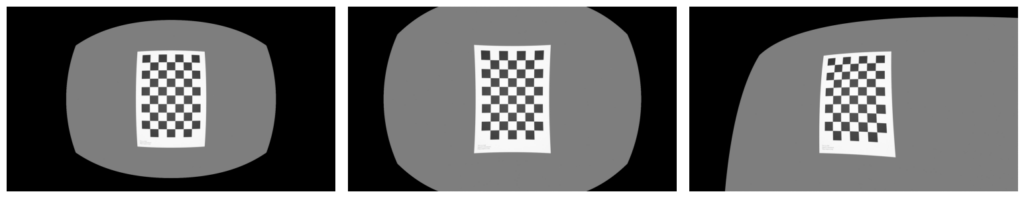

The image above illustrates, from left to right, barrel, pincushion, and tangential distortion. Barrel and pincushion distortion are modeled by the radial distortion terms, in particular the second-order term, while tangential distortion is modeled by the tangential distortion terms.
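A small Python sketch shows how these terms produce the three effects. It assumes the forward convention used by OpenCV (ideal normalized coordinates in, distorted coordinates out), under which a negative second-order coefficient k1 pulls points toward the image center (barrel) and a positive k1 pushes them outward (pincushion), while the tangential coefficients p1 and p2 shift points in a position-dependent direction that is not purely along the radius. The helper names and the sample values are hypothetical.

```python
import math

def radial_distort(x, y, k1, k2=0.0, k3=0.0):
    """Apply only the radial Brown-Conrady terms to a normalized image point."""
    r2 = x * x + y * y
    # Scale factor 1 + k1*r^2 + k2*r^4 + k3*r^6; near the center the k1*r^2 term dominates
    factor = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    # Radial distortion only rescales the point, so its direction from the center is kept
    return x * factor, y * factor

def tangential_distort(x, y, p1, p2):
    """Apply only the tangential (decentering) Brown-Conrady terms."""
    r2 = x * x + y * y
    return (x + 2 * p1 * x * y + p2 * (r2 + 2 * x * x),
            y + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y)

point = (0.6, 0.4)  # a point near the corner of the normalized image plane
r = math.hypot(*point)
for label, k1 in [("barrel", -0.2), ("pincushion", 0.2)]:
    r_d = math.hypot(*radial_distort(*point, k1=k1))
    print(f"{label}: radius {r:.3f} -> {r_d:.3f}")
print("tangential:", tangential_distort(*point, p1=0.01, p2=0.0))
```

Because the displacement grows with r, straight lines far from the center bend the most, which is exactly what the barrel and pincushion grids show.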

The Importance of Camera Calibration

Camera calibration is essential in applications that require precise 3D information to be extracted from images. The following techniques, among others, can be used to extract such 3D information:

- 3D Reconstruction from Stereo Images

- Simultaneous Localization and Mapping (SLAM)

- Structure from Motion (SfM)

- Shape from Shading

These techniques require both the intrinsic parameters and the lens distortion parameters of the camera to be known if precise 3D information is to be extracted. Proper camera calibration is therefore a crucial step in ensuring the reliability and quality of 3D estimates.
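As a hedged illustration of why the intrinsics matter, consider depth from a rectified stereo pair, Z = f·B/d, where f is the focal length in pixels, B the baseline in meters, and d the disparity in pixels. Because Z is proportional to f, a focal length that is off by 5 % yields depth estimates that are off by 5 %; uncorrected lens distortion adds a further, position-dependent error because the disparity itself is measured in distorted images. The values below are made up for illustration and are not results from the thesis.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth (meters) of a point seen in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

# Hypothetical values, chosen only for illustration
true_f = 800.0           # true focal length in pixels
wrong_f = true_f * 1.05  # focal length overestimated by 5 % after a poor calibration
baseline = 0.12          # 12 cm baseline
disparity = 16.0         # measured disparity in pixels

z_true = stereo_depth(true_f, baseline, disparity)
z_wrong = stereo_depth(wrong_f, baseline, disparity)
print(f"true depth: {z_true:.2f} m, estimated depth: {z_wrong:.2f} m "
      f"({100 * (z_wrong / z_true - 1):.1f} % error)")
```

The relative depth error equals the relative focal-length error regardless of the baseline or disparity chosen, since both enter Z only as a common factor.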

Challenges in Camera Calibration

Typically, camera calibration involves capturing images of a known object, such as a checkerboard pattern, and observing how it projects onto the camera’s image plane. The camera parameters are then estimated by minimizing the reprojection error between the observed image points and the points predicted by the camera model, which yields an accurate description of how light rays propagate through the camera’s optical system. The process might sound straightforward, since it amounts to capturing images of the calibration object from various positions, but in practice it requires careful execution. Improper calibration can result in parameters that do not accurately model the optical system, leading to poor-quality 3D estimates.
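The reprojection error is usually summarized as a root-mean-square (RMS) distance, in pixels, between the detected calibration-pattern points and the points predicted by the current camera model; a calibration routine searches for the camera parameters that make this number as small as possible. OpenCV's `cv2.calibrateCamera`, for example, returns the overall RMS re-projection error as its first return value. The sketch below only computes the metric; the function name and the toy point coordinates are hypothetical.

```python
import math

def rms_reprojection_error(observed, predicted):
    """RMS Euclidean distance, in pixels, between observed and predicted image points."""
    if not observed or len(observed) != len(predicted):
        raise ValueError("need two non-empty point lists of equal length")
    squared = [(uo - up) ** 2 + (vo - vp) ** 2
               for (uo, vo), (up, vp) in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))

# Toy example: corners detected in an image vs. corners predicted by a candidate camera model
observed = [(100.0, 100.0), (200.0, 100.0), (200.0, 200.0)]
predicted = [(100.5, 99.8), (199.6, 100.3), (200.2, 200.4)]
print(f"RMS reprojection error: {rms_reprojection_error(observed, predicted):.3f} px")
```

A low RMS value on the calibration images is necessary but not sufficient: poorly varied viewpoints can still yield parameters that fit those images well and generalize badly, which is one reason careful execution matters.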

Another challenge arises when datasets captured with uncalibrated cameras are later used for testing, or for training networks, in settings where calibration is required. Estimating the camera parameters retrospectively can be difficult, depending on the content of the captured images, especially if the original camera is no longer available.

Impact

So, what does this mean for AILiveSim’s customers? Once integrated into the product, this innovative calibration feature will enable customers to easily generate training and testing images that match the geometry of the physical cameras used in their final products. This will significantly reduce the out-of-distribution problem, ensuring that the development images are geometrically nearly identical to those encountered in real-world applications. The result? More reliable and accurate outcomes, leading to superior product performance and a competitive edge in the market.

#DeepLearning #ArtificialIntelligence #ComputerVision #ailivesim