Introduction

NVIDIA’s Deepstream, based on GStreamer, enables the construction of complex image processing pipelines for video analytics. These pipelines consist of interdependent stages. In 3D reconstruction with stereo cameras, for instance, images are rectified before depth is estimated. Rectification in this context means removing lens distortions and transforming the images so that a scene point projects onto the same horizontal line in the left and right images. An example of this type of rectification is provided later in this article. Another type of rectification, the alignment of crops or regions of interest (ROI), is the main focus of this article. At the time of writing, Deepstream lacks functionality for this kind of crop alignment.

Video analytics systems consist of different subsystems, often running on various hardware platforms. Therefore, in addition to elements performing image analysis, image processing pipelines need components that allow video and metadata to be sent to different locations. Considering the complexity of these pipelines, the ability to effortlessly construct, configure, and reconfigure them, while swapping out components as necessary, is highly beneficial.

GStreamer, on which the Deepstream SDK is built, is an open-source multimedia framework for constructing graphs of media-handling components. A component can be a single plug-in responsible for one task, or a collection of plug-ins working together, and components are connected to form a pipeline. In some cases, several different plug-ins can provide the same functionality. Deepstream adheres to this design philosophy. Because it is based on GStreamer, many existing GStreamer plug-ins can be used in Deepstream pipelines, eliminating the need to reinvent the wheel. Another significant benefit is the availability of bindings for different programming languages, which allow pipelines to be created and controlled from those languages.

In a Deepstream pipeline, image analysis occurs in GPU Inference Engines (GIE). Inference involves using a trained neural network model to make predictions based on input data, which in this case are images. Broadly, GIEs can operate in two modes: primary and secondary. Primary GIEs process the entire input image, while secondary GIEs focus on regions of interest (ROI) extracted by the primary GIEs. These regions of interest are also referred to as crops. Imagine you are trying to recognize license plates. In this scenario, the primary GIE detects vehicles and generates bounding boxes around them, along with landmarks indicating the location and orientation of the license plate. The secondary GIE then operates solely on the crop defined by the bounding box, extracting the numbers and letters from the area specified by the landmarks.
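The division of labour between the two stages can be sketched in plain Python. This is only an illustration of the data flow, not Deepstream code: the `Detection` class, `run_pipeline`, and the two stand-in stages are hypothetical names introduced here, and frames are modelled as simple lists of rows.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object found by the primary stage (hypothetical structure)."""
    left: int
    top: int
    width: int
    height: int
    landmarks: list  # (x, y) points in full-frame coordinates


def crop(frame, det):
    """Cut the region of interest out of a frame stored as a list of rows."""
    return [row[det.left:det.left + det.width]
            for row in frame[det.top:det.top + det.height]]


def landmarks_in_crop(det):
    """Express the landmarks relative to the crop's top-left corner."""
    return [(x - det.left, y - det.top) for x, y in det.landmarks]


def run_pipeline(frame, primary, secondary):
    """The primary stage sees the whole frame; the secondary sees only crops."""
    return [secondary(crop(frame, det), landmarks_in_crop(det))
            for det in primary(frame)]


# Stand-ins for the two inference stages, for illustration only.
def fake_primary(frame):
    return [Detection(left=2, top=1, width=4, height=3,
                      landmarks=[(3, 2), (5, 3)])]


def fake_secondary(roi, landmarks):
    return {"roi_size": (len(roi[0]), len(roi)), "landmarks": landmarks}


frame = [[0] * 8 for _ in range(6)]
results = run_pipeline(frame, fake_primary, fake_secondary)
```

The point to note is that the secondary stage never touches the full frame, so anything done to the crop before it reaches that stage only affects a small number of pixels.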

Image Rectification

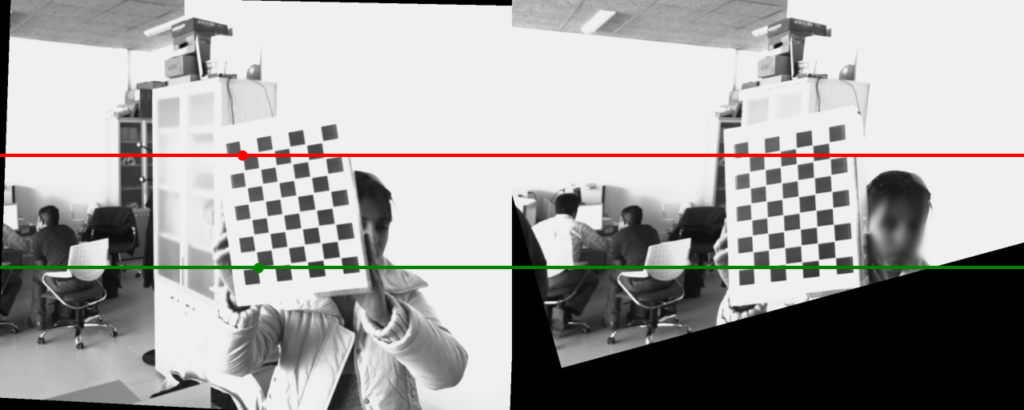

As mentioned in the introduction, many image processing tasks require or benefit from geometric transformations such as rectification. The introduction gave lens-distortion removal and epipolar rectification in stereo-camera 3D reconstruction as an example. While working on my PhD thesis, I developed an image rectification toolbox for rectifying stereo images. The accompanying figure shows an example of its output. For visualization purposes, both rectified images have been combined into a single image.

To test the toolbox under severe conditions, I oriented the cameras differently and captured an image of a camera calibration pattern. In the left image, there are two circles, one on the red line and one on the green line. The matching points in the right image lie along the same epipolar lines. This type of rectification can be considered a preprocessing function: every incoming image is transformed in the same way unless the camera geometry or the lenses change. The transformations are typically parametric, and a lookup table (LUT) defining the transformation is either calculated each time the system starts or calculated once and stored in non-volatile memory for later access.
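The lookup-table idea can be illustrated with a small, standard-library-only sketch. It is not the toolbox described above: the one-parameter radial distortion model, the coefficient `k1`, and the function names are assumptions chosen to keep the example short, and nearest-neighbour sampling stands in for proper interpolation.

```python
import math


def build_lut(width, height, k1):
    """Precompute, for every output pixel, which source pixel to sample.

    Assumes a hypothetical one-parameter radial model in which a point at
    normalised radius r from the image centre is found at r * (1 + k1 * r^2)
    in the distorted input image.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    norm = math.hypot(cx, cy) or 1.0
    lut = []
    for y in range(height):
        row = []
        for x in range(width):
            dx, dy = (x - cx) / norm, (y - cy) / norm
            scale = 1.0 + k1 * (dx * dx + dy * dy)
            sx = round(cx + dx * scale * norm)
            sy = round(cy + dy * scale * norm)
            # Clamp so that every table entry is a valid pixel index.
            sx = min(max(sx, 0), width - 1)
            sy = min(max(sy, 0), height - 1)
            row.append((sy, sx))
        lut.append(row)
    return lut


def remap(image, lut):
    """Apply the precomputed table: one lookup per output pixel."""
    return [[image[sy][sx] for (sy, sx) in row] for row in lut]


# The table is built once ...
WIDTH, HEIGHT = 8, 6
lut = build_lut(WIDTH, HEIGHT, k1=0.1)

# ... and reused for every incoming frame.
frame = [[y * WIDTH + x for x in range(WIDTH)] for y in range(HEIGHT)]
rectified = remap(frame, lut)
```

All of the geometry lives in `build_lut`; the per-frame cost is a single indexed read per pixel, which is why this kind of rectification suits a fixed preprocessing stage.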

ROI/Crop Alignment

Some image processing tasks involve geometrically transforming only a smaller part of the image, called a crop, to make subsequent tasks easier, faster, or both. License plate recognition, described in the introduction, is one example. In this case, the primary GIE detects vehicles, while the secondary GIE performs optical character recognition on the crops defined by the bounding boxes that the primary GIE generates. The figure below shows the bounding box of the detected vehicle (in red) and the landmarks that define the location of the license plate (in green).

If the crop is aligned so that the text on the license plate is horizontal, the secondary GIE can more easily recognize the characters, simplifying its design. Crop alignment is typically based on landmarks extracted by the primary GIE. The required transformation parameters are calculated separately for each crop. The following image shows the license plate after rectification.
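As a rough illustration of calculating transformation parameters for a single crop, the sketch below fits a perspective warp to four plate-corner landmarks. It assumes the primary stage reports the four corners in a known order; the corner coordinates, the 128x32 target size, and all function names are hypothetical, and the code uses only the Python standard library rather than Deepstream's transformation facilities.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_corners(src, dst):
    """3x3 perspective warp that maps four src points onto four dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = solve_linear(rows, rhs)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def warp_point(h, x, y):
    """Apply the warp to one point, including the perspective division."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical landmarks of a tilted plate, ordered top-left, top-right,
# bottom-right, bottom-left, in crop coordinates.
plate_corners = [(112, 208), (198, 190), (202, 216), (116, 235)]
# Canonical target: an upright 128x32 rectangle.
canonical = [(0, 0), (127, 0), (127, 31), (0, 31)]

H = homography_from_corners(plate_corners, canonical)
```

When resampling an image, the mapping is usually fitted in the opposite direction (canonical to detected), so that each output pixel can look up where to sample in the crop.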

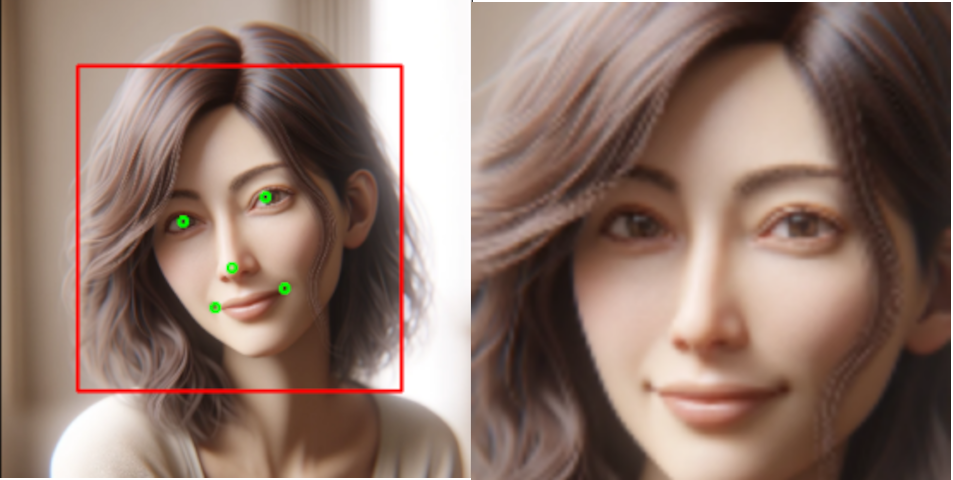

Many image processing tasks can benefit from this type of alignment, which transforms the object of interest into a canonical location and orientation. Another example comes from access control and security applications. In facial recognition or gender and age detection, recognition rates improve when the face is aligned so that the detected facial landmarks coincide with their canonical positions. The figure below demonstrates this.

The left image shows the crop defined by the red box and the landmarks with green dots, while the right image shows the aligned face.
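For this kind of landmark-based alignment, a similarity transform (scale, rotation, translation) is often sufficient. The sketch below fits one by least squares, treating each 2D point as a complex number so that the transform becomes `w = a * z + b`. The canonical template and the detected landmark values are made up for illustration, and the function names are hypothetical.

```python
import cmath
import math


def estimate_similarity(src, dst):
    """Least-squares similarity transform taking src points onto dst points.

    Points are treated as complex numbers x + iy, so the transform is
    w = a * z + b, where a encodes scale and rotation and b the shift.
    """
    zs = [complex(x, y) for x, y in src]
    ws = [complex(u, v) for u, v in dst]
    z_mean = sum(zs) / len(zs)
    w_mean = sum(ws) / len(ws)
    num = sum((w - w_mean) * (z - z_mean).conjugate() for z, w in zip(zs, ws))
    den = sum(abs(z - z_mean) ** 2 for z in zs)
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(a, b, points):
    """Map a list of (x, y) points through w = a * z + b."""
    mapped = [a * complex(x, y) + b for x, y in points]
    return [(w.real, w.imag) for w in mapped]


# Hypothetical canonical template: eyes, nose tip, mouth corners.
CANONICAL_FACE = [(30.0, 50.0), (80.0, 50.0), (55.0, 75.0),
                  (35.0, 95.0), (75.0, 95.0)]
# Hypothetical landmarks detected in a crop containing a tilted face.
detected = [(141.0, 88.0), (187.0, 102.0), (160.0, 119.0),
            (136.0, 132.0), (172.0, 143.0)]

a, b = estimate_similarity(detected, CANONICAL_FACE)
scale = abs(a)
angle_deg = math.degrees(cmath.phase(a))
aligned = apply_similarity(a, b, detected)
```

Because `a` is a single complex number, the fit cannot introduce a reflection or shear, which keeps the aligned face undistorted. Affine or perspective models need more parameters and a slightly larger linear system.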

Processing Speed

Video analytics solutions running on IoT devices, such as smart cameras or edge devices, lack the computational power of the discrete GPUs (dGPUs) found in servers. However, if done correctly, crop alignment is computationally inexpensive. Because aligned crops present their features at canonical locations, the downstream neural network has less variation to cope with and can generally be smaller, with fewer weights. This not only increases system throughput but also reduces initial hardware costs.

Our Solution

As mentioned earlier, at the time of writing, Deepstream does not natively support ROI/crop alignment. However, at Einherjar Consulting, we’ve taken on this challenge and developed a solution to align ROIs/crops before inference. Our implementation leverages Deepstream’s native image transformation resources, ensuring maximum efficiency. While the concept of crop alignment is simple, the details are crucial. Ensuring the correct timing of operations is essential to maximize throughput. Additionally, various types of transformations, such as similarity and affine transformations, and perspective warps, add to the complexity. Getting all of these aspects right can be tricky. Our solution has been rigorously tested on both dGPUs and Jetsons, proving its robustness and versatility. If you’re interested in learning more about how our solution can improve your image processing pipelines, don’t hesitate to reach out!

#EinherjarConsulting #DeepLearning #Deepstream #VideoAnalytics